By: Richard W. Sharp & Patrick W. Zimmerman

Big Tech makes a mountain of money by collecting as much personal data about as many people as possible.

Mainly, this surveillance is used to identify people’s personal habits and sell to them as much as possible, either directly through channels controlled by the corporation in question (e.g., dynamic pricing on Uber) or indirectly, by using this information to facilitate third-party advertising, à la Facebook and Google. The business plans of these companies, which have been very successful from the shareholders’ perspective, amount to running a mass surveillance operation for private gain.

The resulting monopolies aren’t just an issue of market efficiency, however. The real problem lies in how they filter information and free speech.

Data gathering and filtering are typically justified on the grounds that they improve the customer experience.1 Certainly, search results that take into account the individual conducting the search are likely to be more relevant to that person and speed them on their way. Massive social networks like Facebook have changed the way people communicate and stay in contact with each other, in part by helping users sift through the massive amounts of information available on these sites and presenting the most relevant items in each user’s personal stream. Indeed, the Internet could hardly function without intelligent filters to help people find what they are looking for, and such filters are improved by having wide access to the personal habits of users.

American big tech companies have also argued that we should trust them with our data because they “share the values we have.” However, when the public expresses those values in a way that threatens the business model, such as a Washington State election law that requires advertisers to “provide the public with details… concerning the precise funding and reach of local political messaging,” the typical response has been “we’ll see you in court.” It seems that the defenders of our values also see themselves as the definers of our values. In this case, the law was clear, and Facebook and Google settled with the state by agreeing to stop selling political ads rather than meet its transparency requirements. They took their ball and went home rather than engage in the public sphere.

Information gathering itself, under any circumstances, is double-edged. Tech companies have solved a massive signal-to-noise problem in both search and discoverability, but when the goals of the people designing the filters are explicitly different from those of the people who need to use them, there is a serious risk of harm to the public. We’re not exactly living out the GATTACA nightmare, but data breaches and identity theft; denial of advertisers’, publishers’, artists’, dissenters’, and others’ access to markets; and the manipulation of public opinion are all examples of significant harms that have actually come to pass from monetizing surveillance. It might be argued that the problem is simply that the companies involved have not been careful enough with our data, and that market forces can correct for this by exposing a company to reputational and financial losses through bad publicity and litigation. Indeed, in the case of data breaches, this may play a role. However, denial of access and the manipulation of public opinion arose not as exceptions to the business plan, but as natural byproducts of the data-mining business model.

The Internet and social media are public forums by virtue of the simple fact that a significant portion of the public participates in them to communicate and to transact. Big Tech has declared itself the centralized authority for deciding what speech and actions are permissible in this public forum. Misinformation and fake news persist because clicks, money, and investors have no stake in facts. But the public does. Witness our current state of affairs.

The Case for Regulation

It’s clear that there’s a problem. So why is regulation an appealing solution, as opposed to a market-based approach? You may have noticed that recent headlines haven’t exactly been highlighting the stunning efficacy of representative forms of government. How is getting Congress and the executive branch involved going to help?

Simply put, one of the essential purposes and roles of government is to protect its citizens from harm. This is true of government at all levels, from food safety inspections of local restaurants to nationally run air traffic control systems. It is one of the core points of even having a state, a key stipulation of the social contract. When governments fail at this task (for example, in monitoring the safety of the drinking water supplied to the people of Flint, Michigan), it is considered a fundamental failure of the system.

Of course, just about anything can cause harm to some degree, so there is a threshold to meet before regulation is put in place. Paper cuts really hurt, but that new ream of printer paper doesn’t come packaged with gloves. On the other hand, cars kill people when they aren’t operated safely and maintained regularly, or when they are driven on unsafe roads. There is no serious dispute that we must obtain a license and insurance, register a car after showing that it meets fuel economy standards and passes a safety inspection, and pay a fuel tax to fund road construction. Regulation enables us to enjoy the advantages of driving while mitigating the serious harm that can come from it.

Often, and especially among technologists, the word “regulation” elicits a Pavlovian response: “but it will stifle innovation!” However, that argument isn’t consistent with the record. While automobiles are heavily regulated, many of us may not need to own another car thanks to ride sharing and rental services, and if we do buy another car, we’ll have the choice of electric or gas, and it might even be able to drive itself. Innovation in the automobile sector has evidently not dried up.

Likewise, Microsoft was not boxed in following its 2001 settlement with the DOJ that ended its antitrust case. The company not only survived on the strength of its core business, but also succeeded in entering the gaming market that same year and went on to create one of the two major cloud platforms. Whatever place you reserve in your heart for buzzwords, cloud technology did not exist in its modern form when Microsoft settled its antitrust suit,2 and the company has played a leading role in this new and highly successful market.

Struggling victim of regulation: Microsoft. Or not so much.

Given the size and dominance of the major tech firms, the idea of again applying an antitrust approach to the problem is starting to receive serious consideration. Experts have noted the “scale and influence” of these firms and considered how a modern approach to antitrust could avoid stifling innovation. An article on the topic, published by MIT’s Sloan School of Management, considers today’s tech landscape from under the shadow of the Microsoft antitrust case, but it does not address the stifling of the public’s right to freedom of expression or the potential harm of ubiquitous surveillance. This omission is common to most of the arguments for or against regulation.

Going a step further, a number of the big tech players, including Amazon, Facebook, and Google, actually publicly support regulation of technology. These companies and others banded together to support net neutrality, arguing for regulation to defend innovation. Equal access to the infrastructure of the Internet, they argued, is necessary for novel technology products to ever see the light of day. In 2015, the FCC backed net neutrality by deciding to regulate broadband Internet as a utility, arguing that the move was necessary “to protect innovators and consumers” and to preserve the Internet’s role as a “core of free expression and democratic principles.”3

The strongest existing regulation of Big Tech’s proclivity for hoovering up the details of our daily lives comes from across the pond. Europe’s General Data Protection Regulation, which went into effect in 2018, gives individuals control over their personal data. The jury is still out on its long-term impact on technology, surveillance practices, and the public.

The case for regulation rests on a balance between the benefits derived from and the harm caused by a technology or practice. The typical argument against regulation is that it will “stifle innovation,” but that dubious claim distracts us from a more significant issue. When the gatekeepers of the Internet amass a hoard of personal information in order to refine content filters without transparency or oversight, the greater risk is not to the health of the market; it is the dilution of the public’s right of free expression. The citizen’s constitutional rights must outweigh a corporation’s desire to be unburdened by regulation. The First Amendment is not superseded by Facebook’s Terms of Service and its shareholder’s4 desire to profit.

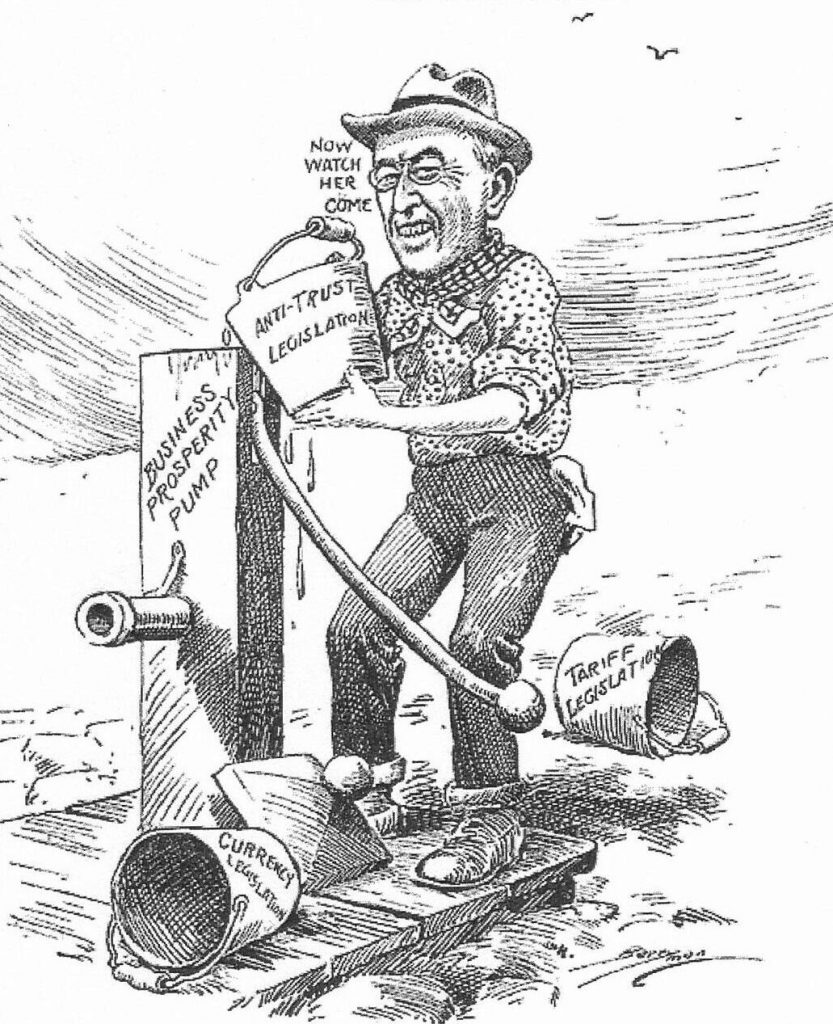

Antitrust legislation was once seen as the key to prosperity, not a hindrance.

An Exploratory Project

So now what? How do we progress toward sensible regulation that protects the rights of the public while allowing that same public to continue to enjoy the benefits of online communication derived from search engines, social media, and even advertising platforms?

Initially, we are proposing a pilot study, with the goals of defining the scope of the problem and digging into potential source bases. Following that initial exploratory work, we plan to launch a longer-term study supplementing those archival and observational sources with ethnographic and experimental lines of inquiry.

Since the object of study is public discourse and free speech, the first step will be to try to quantify how much speech is happening on large, consolidated social media and search platforms. That is to say, how often is Facebook or Google the gatekeeper for our access to information and public debate? To what extent are they the screen through which we see and understand our world?5

We don’t actually know the answer to that question. And most likely, neither do you. That’s a problem, and we take as axiomatic that the public has a right and an obligation to determine the practices by which information is filtered for its consumption.

Ok, maybe a little too literal. But you get the idea.

In practical terms, this means that the study will focus primarily on American search providers, social networks, and their advertising networks. In short, we’re looking principally at the places through which traffic flows to news and reference sites: the mechanisms through which citizens who wish to be informed about, say, the past voting record of newly announced presidential candidate Kirsten Gillibrand discover that information. For now, that means that we’re ignoring many large tech firms, such as major players in the gig economy like Uber. While Uber certainly deserves a lot of scrutiny, it doesn’t filter communication or information in the same way. Similarly, even though we are interested in massive data gathering, we are not focused on surveillance by the NSA. While secretive, the NSA is nevertheless a public organization, and its practices can be checked by the public at the polls and in Congress. While we recognize the potential for harm from indiscriminate surveillance of personally identifiable information by all sorts of entities, that is a different study, one that we may turn to in the future.

The pilot study really boils down to a look at who controls how much access to information, and how they do it. We’re going to initially focus on the following companies:

- Amazon (specifically the publishing and sale of books and ebooks)

- Apple (specifically the App Store and iTunes)

- Facebook (and its ad network, as well as its subsidiaries Instagram and WhatsApp)

- Google (and subsidiary ad networks AdWords, AdSense, and DoubleClick)

- Microsoft (specifically, Bing and Bing Ads)

- Twitter (and Twitter Ads)

- Yahoo

Person of Interest, Mark Zuckerberg.

The central questions of the study, both the pilot and the long-term project, will be:

- How much are people dependent on these filters to make use of the Internet?

- In quantifiable terms, what percentage of searches are controlled by Google, Bing, and Yahoo? What percentage of clickthroughs (also known as referral traffic) to major sources of news (newspapers, wire services like AP, etc.) and reference works (Wikipedia, the OED, academic journals) originate from sources controlled by these companies?

- What concrete and measurable harm has come from the growth of information monopolies?

- What examples are there of journalists, artists, communities, non-profit organizations, and the like being denied publication (or having it limited)? How many legitimate accounts were shut down, and what criteria were used to distinguish bad actors from good? How often have these companies worked for political actors, either willingly (Google customizing a censored search portal to gain access to the Chinese market) or unwittingly (Facebook and Twitter have clearly proven to be popular platforms for misinformation campaigns of various sorts, both foreign and domestic)?

Conclusion

Big Tech has trouble envisioning a world that does not include centralized control of communication. When asked in a 2018 discussion with Recode to respond to calls to break up the big tech firms, Mark Zuckerberg revealed his take on this theme:

“You can bet that if the government hears word that it’s election interference or terrorism, I don’t think Chinese companies are going to wanna cooperate as much and try to aid the national interest there”

After all, China “[does] not share the values that we have.”

You’ll notice that, in this view, only a state-sponsored tech monopoly could fill the void left by breaking up private tech firms. Nothing but a central authority is considered.6 You’ll also excuse us if we can’t remember the point at which we the people decided that our benevolent tech overlords should be empowered to defend our national interests because they exhibit our values. Values that evidently include hiring PR firms to anonymously discredit one’s critics, as Facebook did in response to Freedom From Facebook, a group dedicated to taking back the power Facebook wields over “our lives and democracy.”7

Search, social media, publishers, and advertisers do a real service for the public. They vastly increase the means and reach of communication between individuals and communities. The speed and reach of ideas would be severely limited without them, and it is right to consider the unintended side effects that regulation of these organizations might have. But too often the focus falls on arguments about the potential burden of regulation on business and market efficiency, and not often enough does the discussion emphasize the real harm caused by private control of expression for profit.

This isn’t a market efficiency problem and the answer isn’t to abandon the tech. It’s a free speech problem, and the solution must be decided by the public.

Notes:

1 Very Big Brothers Karamazov of them: they tell us they do it for our own good, because we want them to.

2 Microsoft Azure launched in 2010, and industry leader Amazon’s AWS launched in 2006.

3 The New York Times article also notes that “Ajit Pai, a Republican commissioner, said the rules were government meddling in a vibrant, competitive market and were likely to deter investment, undermine innovation and ultimately harm consumers.” Mr. Pai is now chairman of the FCC and has repealed net neutrality after long and thoughtful deliberation and definitely not because he’s a partisan hack.

4 Oops, we meant “shareholders’.” Plural.

5 Like Carlo Ginzburg’s Early Modern Italian miller, we cannot interpret the world except as mediated by the filters through which we gather information. Now let’s just hope it doesn’t get us burned at the stake like it did Menocchio. Carlo Ginzburg, The Cheese and the Worms: The Cosmos of a Sixteenth-Century Miller, trans. John & Anne Tedeschi (Baltimore & London: Johns Hopkins University Press, 1980).

6 Wikipedia, Mozilla, GitHub, Tor, and friends might be able to suggest some workable alternatives.

7 Freedom From Facebook is not transparent about its financing, so its motives are unclear, but even confirmation of the worst could hardly excuse Facebook’s behavior.

No Comments on "What is the harm in privatizing the surveillance state?"